May 5, 2023

Consistency Models in a Distributed Database

And how to implement them

I was recently working on a project that implements different consistency models for distributed databases and wanted to share how each consistency model is implemented, as well as the advantages certain models have over one another.

There are five main consistency models that I’ll discuss in this post: linearizability, sequential consistency, eventual consistency, sharding, and my personal favorite: causal consistency. For each implementation, I will refer to the underlying database as a key-value store.

Linearizability

Linearizability is the strictest consistency model on the list. The idea behind linearizability is to treat the consistency of a distributed cluster as if all operations were happening on one server: there must be a total order maintained for all operations. To rephrase, each client operation performed on any node in the cluster must follow a linear order. Sadly, this comes at the cost of latency, because concurrent operations are practically not allowed.

Implementation

It takes a lot of coordination between nodes in a cluster to implement a total order of operations. To assist in this, we can use a primary-based approach in which there is one leader with N replicas. This approach is simple, because all operations have to go through the leader node, and this leader node coordinates which replica can continue to serve client requests.

When a replica receives a read request for a key, this request is sent to the master node. The master node maintains a single queue of messages that it has received from all the replicas, and a separate thread is responsible for handling these messages.

After sending the request to the master, the replica node waits until the master node sends it a message informing it that it can continue to process the client’s request. After the client request is processed, the replica sends an acknowledgment message to the master informing it that it has finished processing the client’s request, and only then can the master node continue processing other messages from its message queue.

Write requests work a little bit differently because each replica must perform writes in the same order. When a replica receives a write request for a key, it also delegates that request to the master. However, when processing the write request, the master broadcasts that write request to all nodes in the cluster, and waits for acknowledgments from all the replicas before it can continue executing. After all the nodes have received the write request, the replica can send an OK message to the client, informing it that the write request was successfully delivered.

Another viable implementation of linearizability is to have a total order multicast sequence in the cluster. This means that each node maintains a message queue, and you must ensure that every message queue in all nodes follows the same order. Of course, this increases the latency of client requests because the nodes must all communicate with each other, on each client request rather than communicating through a single master.

Sequential Consistency

Sequential consistency is less strict than linearizability, which means that it also performs better in terms of latency. This model can be best summarized by a primary-based local read architecture.

The model follows the same primary-based approach in the linearizability implementation discussed above: there is one master node with N replicas. In addition, writes follow the same logic: all write requests must go through the leader node, which the leader then propagates this write request to all the replicas and waits for acknowledgments from each replica.

Where sequential consistency differs is on read requests. When a replica receives a read request, it can just serve its local value for that key and return it to the client, even if the key is outdated. This makes read requests extremely quick as nothing has to go through the master node, and there is no need for acknowledgments.

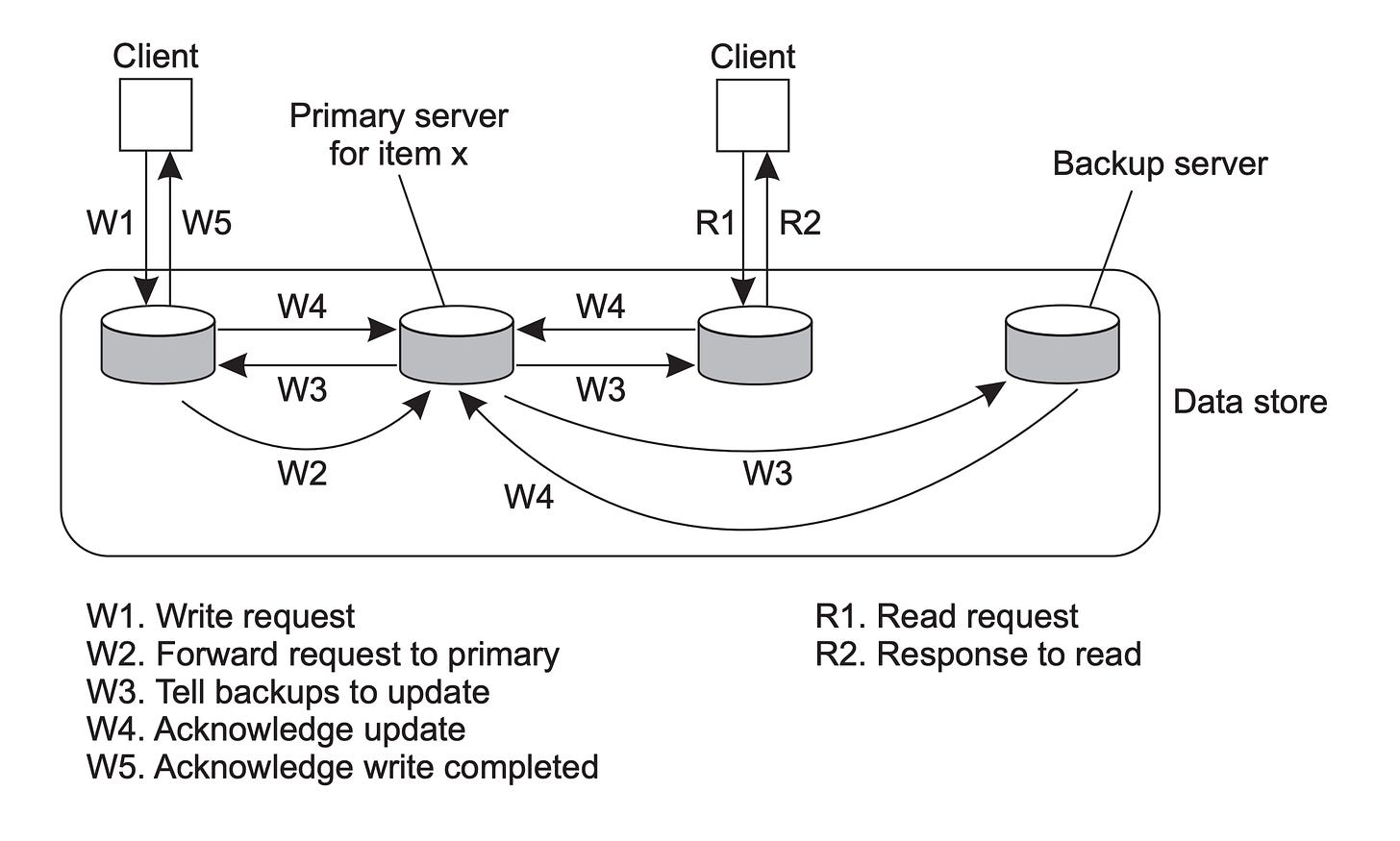

This diagram below gives a good visual summary of how sequential consistency is implemented:

Source: Unkown

Eventual Consistency

Eventual consistency is the most relaxed model on the list, which makes it the fastest in terms of serving read and write requests.

The idea behind eventual consistency is that all nodes will converge to have the same keys and values eventually (when there aren’t many write requests). You can serve outdated read request values as many times as you want, but eventually, all nodes will maintain the same state.

Implementation

There are many ways to implement eventual consistency. However you decide to implement it, you must have a way to ensure that all of the nodes process the same write requests in the same order.

To do this, we can use a total order multicast approach, which makes each node in the cluster maintain a message queue for write requests. To maintain the ordering of write requests, each node maintains its own Lamport clock timestamp (an integer value initialized to 0, and incremented after every write request). If a node receives a write request, it increments its Lamport clock and adds the write message to its own message queue, along with a copy of the value of its Lamport clock. The node also broadcasts that write requests to all nodes in the cluster (also with its Lamport clock included in this message).

When a node receives a write message from another node in the cluster, it adds it to its own message queue and sorts the message queue based on the Lamport clock value associated with each message in ascending order (if two clocks have the same value, there must be a common way to break these ties). The node then sends an acknowledgment to all nodes in the cluster, informing them that it has received that write request (it does not yet process it).

The node can only process a message from its message queue if the two following rules hold:

The message is at the head of the message queue

All of the nodes in the cluster have acknowledged receiving this message.

This way, we can guarantee no conflicts between write requests, and that every node in the cluster will eventually converge into the same state.

As I previously mentioned, read requests can simply be served locally, without any communication with other nodes.

An alternative, simple approach is to use a looser version of the primary-based model (without acknowledgments).

Causal Consistency

Causal consistency follows the same principle as sequential consistency but adds one simple tweak: all writes that are causally related must be processed by all nodes in the same order. This means that nodes can process some writes in a different order, but any writes that are causally related (i.e.: client writes X, reads key X, then writes a different key Y) must be processed in the same order for all nodes in the cluster.

To elaborate on this idea, imagine we have 3 nodes in our cluster. A client connects to one of the nodes and writes the value dog for the key pet. The same client sends a request to read the value for the key pet, and then writes a new entry, setting favorite_dog to mack. All of these three events are causally related, and thus the two writes for keys dogand favorite_dog must be processed in the same order for all the nodes in the cluster.

This consistency model doesn’t worry about ordering writes that are not casually related, and thus nodes can process unrelated writes in different orders. One downside to this approach is that you cannot guarantee that the nodes in your cluster will ultimately converge to having the same state. However, you can add guarantees for ultimate consistency, which I will discuss in the implementation details below.

Implementation

Similar to sequential consistency, we can use a primary-based approach. There will be one leader node and N replicas. In addition, all writes will be propagated to the master node, which then will broadcast them to all replicas and wait for acknowledgments from each. This architecture guarantees that all nodes will process writes in the same order.

To guarantee the causality relationship between nodes, we must introduce Lamport clocks to all nodes in the cluster.

Whenever the master node receives a write request, it will increment its own Lamport clock, and send it along with the write command to all replicas. When a replica receives a write request from the master, it will update its own Lamport timestamp to be equal to the one included in the write request. Communication between the master and replicas can happen using a variation of the total order multicast implementation discussed previously, or by using a pub/sub messaging approach (Apache Kafka, ZeroMQ, etc).

To sustain the causal relationship, the client must also keep track of its own Lamport clock, which means that nodes will need to include their own Lamport clock in the response messages for the client to parse. For example, rather than replying with OK, replicas will instead reply with OK 3.

Now, whenever a client issues a read request, it can optionally include that timestamp with the read message. If a node receives a read request accompanied by a timestamp from the client, it can only serve that request if the following condition holds:

The node’s own timestamp is greater than or equal to the client’s timestamp

If this is not the case, we know that the replica is in an inconsistent state and has still not processed all the incoming writes. The replica must hold off on processing the client’s request and only continue when its local timestamp reaches the client’s timestamp.

Sharding

Sharding is possibly the most popular method on the list. Although it’s not necessarily a consistency model, it offers an optimal way of handling data in a distributed environment.

In simple terms, sharding works by partitioning the data on different nodes. Each node becomes responsible for handling reads/writes for a subset of the data. For example, in our key-value database, we can partition the data by keys names: node #1 becomes responsible for handling reads & writes for any key that starts with letters A - H, node #2 is responsible for keys that start with I - Q, and node #3 handles keys starting with letters R - Z. The workload is thus split amongst the nodes, and they don’t need to communicate to manage consistency.

Sharding especially works best in write-heavy systems. However, In a write-heavy and read-heady system, each shard can basically be its own cluster and have its own replicas that implement one of the consistency models discussed above. This method is used by popular databases like MongoDB and Cassandra.

Implementation

How do you know which shard to connect to when you want to read/write a new key? Well, you define that and make it publicly known to all clients. For example, you can define that keys starting with letters A - H goes to node #1, any key that starts with I - Q goes to node #2, and any key that starts with I - Q goes to node #3.

If a node receives a key that starts with a letter that it’s not responsible for maintaining, it can simply reject that request, and respond with INVALID_KEY. Alternatively, it can re-route that request to whatever node is handling keys within that key range.

One downside to this approach is that it’s possible for the workload to not be evenly distributed amongst nodes. What if most of the keys in your database start with the letter A? Then node #1 will handle a lot of the load.

To fix that, we can use an algorithm (used by Cassandra) called Consistent Hashing. Consistent hashing aims to solve this uneven workload issue by hashing the given key, which converts the key to a unique hash value (ex: key dog is hashed to 9zf1. You can then re-implement the sharding rules for your cluster based on the hashing algorithm you choose (node #1 handles keys starting with 1-3, node #2 handles keys that start with 4-6, and node #3 handles keys starting with 7-9). Ultimately, this method produces a better way of handling workload distribution amongst your cluster.

(PS: check out this awesome article from Notion about how they planned to shard their PosgreSQL database: https://www.notion.so/blog/sharding-postgres-at-notion)

Where’s the Code?

I implemented these consistency models, in Rust and with unit tests, on my GitHub page here: https://github.com/tk04/distributed_db_consistency_models.